Editor's note: MobiHealthNews spoke with two experts about whether GPT-5 should be regulated or comply with HIPAA, and the broader implications of AI in healthcare. This is part one of a two-part series.

OpenAI CEO Sam Altman announced the release of GPT-5 earlier this month, saying the AI platform should be used for healthcare and touting its ability to help individuals navigate their healthcare journey.

In January, Altman joined President Donald Trump, Oracle's Chief Technology Officer Larry Ellison and SoftBank CEO Masayoshi Son to announce Project Stargate, a $500 billion investment to build the physical and virtual infrastructure to power AI construction, with a primary focus on healthcare.

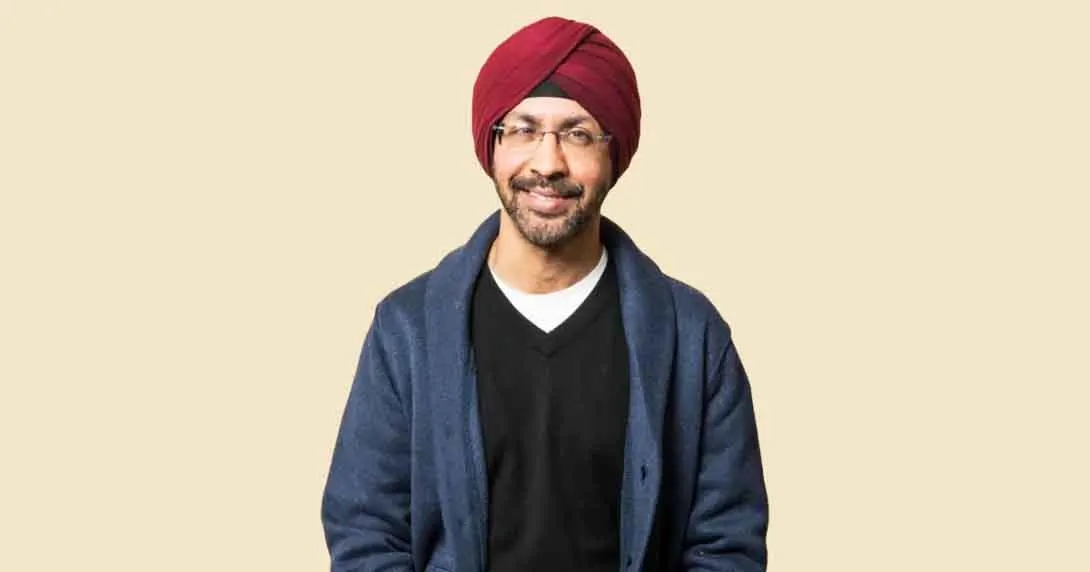

Lee Kim, senior principal of cybersecurity and privacy at MobiHealthNews' parent company, the Healthcare Information and Management Systems Society (HIMSS), sat down with MobiHealthNews (MHN) to discuss when HIPAA applies to GPT-5, the promise and risks of AI in healthcare, and why Trump's Project Stargate must prioritize patient safety alongside infrastructure.

MobiHealthNews: Do you think GPT-5 should be HIPAA compliant?

Lee Kim: If a person shares their own health information with GPT-5 on their own, HIPAA does not apply because they are not a covered entity or acting for one. But if a doctor uses GPT-5 to process the patient's health information – or tells the patient to use GPT-5 and provides the access – HIPAA applies, because the doctor is responsible for safeguarding that data.

HIPAA only applies if the person or organization handling the information is a regulated entity – a covered entity, business associate or part of an organized healthcare arrangement.

MHN: Artificial intelligence is progressing so quickly. Is there any aspect of AI technology that makes you cautious about its use in healthcare?

Kim: AI innovation marks a significant advancement in the state of the art. It allows us to solve problems faster and address complex challenges in healthcare.

AI-enabled patient education can empower patients with clearer, more effective informed consent. It can accelerate the discovery and improvement of drugs and biologics. By harnessing data, AI can help us see the whole patient, providing insights and solutions that we might never uncover on our own.

But to achieve this, we must ensure that data is kept private and secure, disclosed and used only as appropriate. A well-functioning healthcare system depends on robust cybersecurity. You cannot do business or clinical work without it.

MHN: Earlier this year, President Trump announced Project Stargate. What are your thoughts on that endeavor?

Kim: This project aligns with [The White House AI Action Plan] Pillar II's call for robust AI infrastructure, expanded energy generation and resilient systems to meet unprecedented compute demands – particularly in healthcare, where AI workloads span diagnostics, precision medicine, clinical research and population health.

But in healthcare, infrastructure alone is not enough. Patient safety and care require AI that is safe, secure and resilient, with cybersecurity built in from the start to protect against adversarial risks like data poisoning or manipulated inputs.

Protect the patient, protect the data and use AI to make smarter use of the data.